What are your least favorite software 'knobs'?

- Schol-R-LEA

- Member

- Posts: 1925

- Joined: Fri Oct 27, 2006 9:42 am

- Location: Athens, GA, USA

In another thread, I mentioned the P. J. Plauger essay 'The Knob on the Back of the Set'. It was a tongue-in-cheek piece in his 'Programming on Purpose' column in the journal Software Development, written for April Fools' Day from... uh, 1989 or so? I couldn't find a version of it online, but I am pretty sure it appears in one of the volumes of his Programming on Purpose anthology set.

Anyway, the piece used an analogy to the various knobs on older television sets with unintuitive labels such as 'Vert Hold', whose functions only exist because of some design compromise somewhere. He argued that the larger the language, and the more fingers which were stuck into the early parts of the language design, the more knobs they tend to have. He took aim specifically at the type resolution system in PL/1, the indirection model in Algol-68, and the package system in Ada-83.

So the question is, what 'knobs' have infuriated you in various languages, tools, systems, etc.?

I'll post the explanation of the Algol-68 malarkey right after this.

Last edited by Schol-R-LEA on Tue Aug 07, 2018 2:11 pm, edited 2 times in total.

Rev. First Speaker Schol-R-LEA;2 LCF ELF JAM POEE KoR KCO PPWMTF

Ordo OS Project

Lisp programmers tend to seem very odd to outsiders, just like anyone else who has had a religious experience they can't quite explain to others.

- Schol-R-LEA

- Member

- Posts: 1925

- Joined: Fri Oct 27, 2006 9:42 am

- Location: Athens, GA, USA

Re: What are your least favorite software 'knobs'?

So, Algol-68. I am going by memory on this, and getting it third hand from Plauger anyway, so bear with me.

Apparently, due to the committee dropping acid or something right before designing the pointer system, Algol-68 used direct semantics except with pointers, and eschewed obligatory indirection operators as clutter, favoring hinting mechanisms instead - ones which the compiler didn't need to follow, like the C register modifier or the Java System.gc() method.

The resulting 'knob' meant that in general you couldn't tell from a given statement if it were an indirection or not without understanding details about the variable declarations and the global context (!), and would often have to guess whether an assignment to a pointer variable would alter the variable, or the element it points to. Fun times (not fun times) for all.

They then went on to describe the resolution mechanism in completely unique and non-obvious terminology, without defining the terms adequately, leading to the infamous phrase "stirmly hipping to void" appearing in a supposedly sober and thoughtful official language definition document.

For the record, it apparently means to give a type hint - one that is 'stirm', that is, between 'firm' and 'strong' in terms of whether the compiler needs to follow it - that a series of multiple indirections should be snapped (a 'hop, skip, and jump') with the result coerced to a void pointer (that is to say, an untyped pointer; this is probably where Stroustrup got the term as used in C++, which then got back-ported to C by the 1988 ANSI committee). So now you know. Brain bleach can be obtained at the following locations...

Rev. First Speaker Schol-R-LEA;2 LCF ELF JAM POEE KoR KCO PPWMTF

Ordo OS Project

Lisp programmers tend to seem very odd to outsiders, just like anyone else who has had a religious experience they can't quite explain to others.

- StudlyCaps

- Member

- Posts: 232

- Joined: Mon Jul 25, 2016 6:54 pm

- Location: Adelaide, Australia

Re: What are your least favorite software 'knobs'?

<flamebait>Unix, basically all of it.</flamebait>

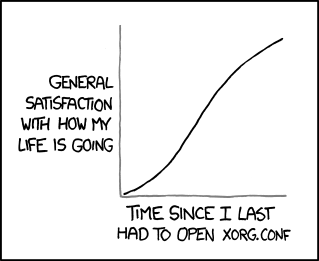

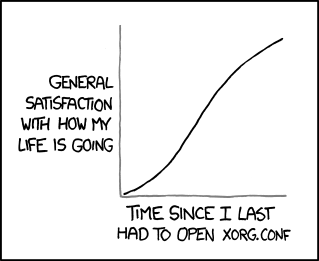

Seriously though, XServer and specifically the xorg.conf file.

Excerpt from the xorg.conf man page:

Videoadaptor Section

Nobody wants to say how this works. Maybe nobody knows ...

Basically, it's a weird relic of a past where dumb (or thin) clients were the norm, and it's just utter rubbish for setting up a desktop environment; the fact that there are still no real alternatives (afaik) is mind blowing. The Nvidia GUI for it is worse than useless too.

Re: What are your least favorite software 'knobs'?

The polar opposite of "too many fingers too early in the design" is "things we did not even think about when we first tried to nail this down", with the inevitable incompatible expansions slapped on afterwards...

-----

This one was very much my own fault, but due to the environment in which I stumbled across it, this nice trap took some serious debugging before I figured it out.

I love C++ for being the unapologetically ugly beast it is. But there's something to be aware of when you're mixing C and C++ (as so many intermediate C++ coders do):

References and variadic functions don't mix.

This is actually made explicit in the standard text, but who ever reads that?

So, let's say you want to write (for whatever reason) a "C++ printf":

```cpp
#include <cstdarg>
#include <string>

// SERIOUSLY BROKEN CODE
void cppprintf( std::string & format, ... )
{
    va_list ap;
    va_start( ap, format );  // undefined behaviour: "format" is a reference
    // ...
    va_end( ap );
}
```

This won't work. What va_start() does is (assuming a stack architecture) point ap at the last "known" parameter on the stack. Subsequent calls to va_arg() provide a type, so the compiler knows the size of the requested object, advances ap accordingly, and returns the value.

This works fine if the parameter is a data object. It also works fine if the parameter is a pointer.

But a reference is "an alias for the thing referenced"... and that resides in the caller's stack frame:

```cpp
int main()
{
    std::string f = "%d";
    double d = 3.14;
    int i = 42;
    cppprintf( f, i );
    return 0;
}
```

The "format" in the scope of cppprintf(), being a reference, is an alias for "f" in main(). That means that the address of "format" is not in the stack frame of cppprintf(), but in the stack frame of main()... and calling va_arg( ap, int ) in cppprintf() will not give you a 42...
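One way around the trap described here is to keep the last named parameter a plain pointer (or value) and to put any reference-taking convenience layer in front of it. What follows is only a minimal sketch, not a real printf replacement: cformat() and cppformat() are hypothetical names, the 256-byte buffer is an arbitrary assumption, and only arguments that are safe to pass through "..." (ints, doubles, pointers) are handled.

```cpp
#include <cstdarg>
#include <cstdio>
#include <string>

// Hypothetical helper: the last named parameter is a plain pointer, not a
// reference, so va_start() is well-defined here.
std::string cformat( const char * format, ... )
{
    char buffer[ 256 ];  // arbitrary size for this sketch; longer output is truncated

    va_list ap;
    va_start( ap, format );
    std::vsnprintf( buffer, sizeof( buffer ), format, ap );
    va_end( ap );

    return std::string( buffer );
}

// Hypothetical wrapper: a variadic template may take the format by reference,
// because no va_start() is involved at this level.
template< typename... Args >
std::string cppformat( const std::string & format, Args... args )
{
    return cformat( format.c_str(), args... );
}
```

The design choice is simply to confine the C-style varargs machinery to a function whose named parameter is not a reference; the C++-facing layer can then use whatever parameter types it likes.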

-----

Another part that is true to my "polar opposite" mentioned above is the whole subject of internationalization. By the time people realized how far that particular rabbit hole goes, we were already nailed down by single-character toupper() / tolower() functions, inconsistent definitions of what wchar_t / "wide" strings are supposed to be, locale functions that only almost do what they advertise to be doing, and the general belief that "en_US" or "de_DE" is sufficient to set any desired "locale-specific behaviour"...

Every good solution is obvious once you've found it.

- Schol-R-LEA

- Member

- Posts: 1925

- Joined: Fri Oct 27, 2006 9:42 am

- Location: Athens, GA, USA

Re: What are your least favorite software 'knobs'?

Solar wrote: The polar opposite of "too many fingers too early in the design" is "things we did not even think about when we first tried to nail this down", with the inevitable incompatible expansions slapped on afterwards...

True, true. While committee-built camels are an easy target to slam, lack of foresight on the part of a single designer or small design group tends to get sticky, as it not only ends up with a whiff of de gustibus non disputandum if you can't point to an objective fault, it also tends to be taken a lot more personally by the designers and/or their defenders (which I suppose brings us back to Python, as per the thread I'd hoisted this topic from).

Solar wrote: Another part that is true to my "polar opposite" mentioned above is the whole subject of internationalization. By the time people realized how far that particular rabbit hole goes, we were already nailed down by single-character toupper() / tolower() functions, inconsistent definitions of what wchar_t / "wide" strings are supposed to be, locale functions that only almost do what they advertise to be doing, and the general belief that "en_US" or "de_DE" is sufficient to set any desired "locale-specific behaviour"...

Ugh, yes. The only thing harder and messier than internationalization is the closely related topic of time and date calculations, which often feels like a bottomless pit. Even if we defend the developers of (say) Unix on the basis that they didn't expect the system they cobbled together to play Space Travel and run TROFF print runs on would escape Bell Labs, never mind expecting that it would become an international quasi-standard whose descendants are still going fifty years later, or point out that the old National Standards Board didn't anticipate ASCII being used outside of the US, it doesn't really change the fact that the attempts to fix it with things such as Unicode have only made a bigger mess.

Rev. First Speaker Schol-R-LEA;2 LCF ELF JAM POEE KoR KCO PPWMTF

Ordo OS Project

Lisp programmers tend to seem very odd to outsiders, just like anyone else who has had a religious experience they can't quite explain to others.

Re: What are your least favorite software 'knobs'?

Err... I think that Unicode was a huge step in the right direction, and is definitely the way to go... the pity is that most existing APIs don't really cater for the complexity that full Unicode support brings, and that too many developers still don't really understand what would be required. So they "make do" with stuff like "x - 'A'" and converting strings tolower() before comparing, thinking that it gives them case-insensitive comparison... when it really, really does not.

Full disclosure, I am currently (as in, right now) digging through The Unicode Standard in order to get it "as right as possible" in PDCLib...
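The tolower()-before-comparing habit mentioned above can be sketched as follows. This is an illustration under stated assumptions, not anything taken from PDCLib: naive_lower() and naive_equal_ignore_case() are hypothetical names, the strings are assumed to be UTF-8, and the program is assumed to run in the default "C" locale.

```cpp
#include <cctype>
#include <string>

// Naive approach: lower-case every byte with std::tolower(), then compare.
std::string naive_lower( std::string s )
{
    for ( char & c : s )
    {
        // The cast to unsigned char avoids undefined behaviour for bytes >= 0x80.
        c = static_cast< char >( std::tolower( static_cast< unsigned char >( c ) ) );
    }
    return s;
}

// Hypothetical "case-insensitive" comparison built on the naive folding above.
bool naive_equal_ignore_case( const std::string & a, const std::string & b )
{
    return naive_lower( a ) == naive_lower( b );
}
```

For plain ASCII this behaves as hoped, so "Hello" matches "HELLO". It does not match "hei\xC3\x9F" (the UTF-8 bytes of "heiß") against "HEISS", because a byte-by-byte, one-to-one mapping has no way to express that the sharp s folds to two letters.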

Every good solution is obvious once you've found it.

Re: What are your least favorite software 'knobs'?

Hi,

Solar wrote: Err... I think that Unicode was a huge step in the right direction, and is definitely the way to go...

Unicode was a huge step in the right direction, and then the Unicode consortium started behaving like teenagers at their first happy hour, slapping things like emoticons and Klingon into the spec in a bizarre attempt to get the cool kids to like them. It's getting hard to think of it as a serious international standard when every update is more silly nonsense.

Cheers,

Brendan

For all things; perfection is, and will always remain, impossible to achieve in practice. However; by striving for perfection we create things that are as perfect as practically possible. Let the pursuit of perfection be our guide.

Re: What are your least favorite software 'knobs'?

Brendan wrote: Unicode was a huge step in the right direction, and then the Unicode consortium started behaving like teenagers at their first happy hour, slapping things like emoticons and Klingon into the spec in a bizarre attempt to get the cool kids to like them. It's getting hard to think of it as a serious international standard when every update is more silly nonsense.

Klingon isn't in the spec, and emoji were an important part of supporting the Japanese mobile phone market - and they made internationalization and proper wide character support popular in the wider consciousness.

Unicode has actual mistakes you can deride, like the fiasco that is Han Unification.

Re: What are your least favorite software 'knobs'?

I know we're hijacking the thread here (perhaps splitting off the Unicode discussion would be an idea), but since I am currently actively working on the subject, I am engaged...

klange wrote: Unicode has actual mistakes you can deride, like the fiasco that is Han Unification.

I've heard that one-liner criticism voiced often, and sure there were issues involved -- as there were with several other scripts that were in use long enough to involve significant glyph variations. A kind of "the way they did it has problems". But I have yet to hear someone come up with any "how it should have been done" that doesn't also have its share of problems.

Every good solution is obvious once you've found it.

Re: What are your least favorite software 'knobs'?

Solar wrote: I've heard that one-liner criticism voiced often, and sure there were issues involved -- as there were with several other scripts that were in use long enough to involve significant glyph variations. A kind of "the way they did it has problems". But I have yet to hear someone come up with any "how it should have been done" that doesn't also have its share of problems.

Han Unification was a mistake from the beginning, founded on the false premises that there weren't enough codepoints to go around and that Chinese hanzi, Japanese kanji, and Korean hanja were actually the same script. There were several preferable alternatives to the Han Unification, both at the time and invented later. The most obvious was just not doing it - giving Chinese, Japanese, and Korean their own codepoints, based on the existing national standards for text used in their countries of origin. Much of the criticism towards the unification derives from its inconsistency - why are traditional and simplified Chinese separated? Why are several assorted characters separated anyway even when they have virtually equivalent glyph renditions? Why are some drastically different glyph renditions merged?

One of the stated goals of Unicode was to be able to represent all of the world's languages, but while I can put English and German text in a single plain-text document, I can not do that with Chinese and Japanese without appending additional metadata outside the scope of Unicode. It took a decade from the time the CJK scripts were added for the Consortium to introduce a solution within Unicode itself, but support for variation selectors never happened - it was too late. Ultimately, the only saving grace for Han unification was language metadata, which is the only way Chinese and Japanese text can exist side-by-side in HTML.

Re: What are your least favorite software 'knobs'?

Hi,

Solar wrote: I've heard that one-liner criticism voiced often, and sure there were issues involved -- as were with several other scripts that were in use long enough to involve significant glyph variations. A kind of "the way they did it has problems". But I have yet to hear someone coming up with any "how it should have been done" that doesn't also have its share of problems.

klange wrote: Han Unification was a mistake from the beginning founded on a false premise that there weren't enough codepoints to go around, and that Chinese hanzi, Japanese kanji, and Korean hanja were actually the same script.

I think this is more of a historical mess:

- Late 1980s: Unicode consortium decided to have a 16-bit character set

- Early 1990s: Microsoft adopted UCS-2

- ????: Unicode consortium felt pressured into sticking everything that matters into the 16-bit UCS-2 space so they don't break existing code (Windows), and came up with Han unification (with less commonly used stuff shoved into "extension" ranges outside of the UCS-2 space)

- 2000s: Microsoft and everyone else switched to UTF-16 anyway (with a much larger range of representable code points); making Han unification seem silly in hindsight
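To make the UCS-2 versus UTF-16 point in the list above concrete: a code point up to U+FFFF fits in a single 16-bit unit, while anything beyond that needs a surrogate pair. The sketch below is only illustrative; to_utf16() is a hypothetical name, and it does no validation (it assumes the input is a valid code point no larger than U+10FFFF and not itself a surrogate).

```cpp
#include <string>

// Hypothetical helper: encode one Unicode code point as UTF-16 code units.
// Assumes cp is a valid scalar value; no error checking in this sketch.
std::u16string to_utf16( char32_t cp )
{
    std::u16string out;

    if ( cp < 0x10000 )
    {
        // Fits in one 16-bit unit: this is all that UCS-2 could represent.
        out += static_cast< char16_t >( cp );
    }
    else
    {
        // Outside the 16-bit range: split the remaining 20 bits into a
        // high (lead) and a low (trail) surrogate.
        cp -= 0x10000;
        out += static_cast< char16_t >( 0xD800 + ( cp >> 10 ) );
        out += static_cast< char16_t >( 0xDC00 + ( cp & 0x3FF ) );
    }

    return out;
}
```

For example, U+4E2D comes out as one unit, whereas U+20000 (the first code point of a CJK extension block outside the 16-bit space) comes out as the pair 0xD840 0xDC00.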

Cheers,

Brendan

For all things; perfection is, and will always remain, impossible to achieve in practice. However; by striving for perfection we create things that are as perfect as practically possible. Let the pursuit of perfection be our guide.

- Schol-R-LEA

- Member

- Posts: 1925

- Joined: Fri Oct 27, 2006 9:42 am

- Location: Athens, GA, USA

Re: What are your least favorite software 'knobs'?

I am guessing that the posts about Unicode here will get Jeffed out to a new thread by the mods (please do), but I wanted to mention this discussion on the Daily WTF's fora (warning, NSFW) from shortly before I stopped posting there in order to safeguard my sanity.

This was actually one of the less violent and flamey threads on that group, with plenty of well-considered opinions. Go figure. In light of the amount of trollery that did occur in that thread, this should tell you a lot about why I left (though it raises the question of why I was there in the first place). Thoughtful (about technical problems, that is), knowledgeable, and witty jerks are still jerks, which I suppose could be applied to myself as well (assuming one considers me thoughtful, knowledgeable, or witty, which I hope is the case but I wouldn't be surprised if most don't).

Note that I agree that Unicode is an improvement over ASCII, and indeed the point of that thread was that someone whose opinion I respect (Ted Nelson) had stated in email that Unicode was a cultural disaster (the exact quote was, "Unicode has demolished cultures"), and I was asking for ideas as to why he said this (he hadn't given me much of any answer at that point). I knew that he'd been in Japan in the late 1990s, and I got the impression (which others seemed to corroborate) that his view was colored by resentment over the move from the existing national encodings to one which was seen as culturally insensitive, so I do think he placed far too much weight on that experience.

(Ted never did give me a direct answer on his opinions, but just pointed me to one of his books, Geeks Bearing Gifts, which I have yet to read. To be fair, it isn't as if he has any reason to see me as anything other than a stranger asking odd questions. While we did meet a few times, it's been about fifteen years since we last spoke.)

One point I made that is possibly important (for those who don't want to wade through that quagmire) is that the idea of a single, linear, and stateless encoding doesn't fit a lot of languages well. For example:

- Hanzi character ordering is based on the radicals which make up the ideograms, and even if we treat Traditional and Simplified characters as separate sets¹, there is no single, linear ordering that is universally accepted. Also, most of these orderings are explicitly tabular, with relations in (at least) two dimensions.

- Japanese kanji - which is based on Traditional Hanzi, but has drifted and is not always the same as the modern versions of the 'traditional Hanzi' - has its own approach to ordering the characters, and while I don't know the details, trying to use a Chinese ordering with Japanese, or vice versa, is going to cause problems.

- The Japanese Kana syllabary is also explicitly tabular in ordering, and forcing it into a linear form loses significant information about the relationships between the characters.

- A number of scripts - most notably Arabic - are stateful, in that different letters have different letter forms depending on their position in the word. I don't offhand know how this is solved in Unicode, so how much of a problem it is isn't clear to me. Perhaps someone else could speak up about it?

- Ligatures and other kinds of merged digraphs are often a problem, though I gather it is mostly a solved one currently. I would expect Solar to be familiar with this problem (assuming it still is one) regarding the sharp-s character ('ß') in German.

As some on the thread pointed out, technological issues with characters aren't a new thing; the examples given include two from English, namely the discarding of the 'thorn' ('þ') and eth ('ð') due to them not being available in the metal typefaces imported from continental Europe in the 16th century², and the similar discarding of the medial 's' ('ſ') in the late 18th century³.

One could look even further back, to the reasons why some languages have left-to-right, top-to-bottom ordering, others have right-to-left/top-to-bottom, still others have boustrophedonic orderings⁴ (either consistently or depending on the writing) and yet others have top-to-bottom ordering (which could then go either right-to-left or left-to-right). For example, Chinese top-to-bottom, right-to-left ordering appears to have originated from the use of bamboo slips strung into books which were sewn into rolls or folded codices, whereas in cuneiform, the original top-to-bottom ordering became right-to-left as a way of avoiding smudging of the clay tablets - while it is by no means certain that this carried over to proto-Hebrew and proto-Arabic writing forms, the theory that it did is fairly widespread (though it seems unlikely given that they both came more from the unrelated Phoenician abjad and Phoenician was right-to-left too). How Greek and Latin, which both were based on Phoenician, came to reverse the order isn't known, IIUC.

I seem to recall some arguing that the Phoenician ordering relates to Egyptian, where it was used because of the manner in which their mural art and hieroglyphs work, but that seems unlikely given that there is no direct connection between the Phoenician and Egyptian writing forms. In any case, the claim regarding Egyptian is incorrect - the order of reading in Egyptian varied by context (with some words read in one direction and some in another), medium (papyrus writing was sometimes, but not always, top to bottom, while inscriptions and murals depended on the layout of the wall or monument), and era.

footnotes

1. Somewhat artificially, from what I've heard, but that also goes towards the political reasons for which first the Nationalist government, and then the Communist government, each introduced their own 'simplified' scripts - while the main reason was to promote literacy, without having to submit to outside cultural influences while doing so, they also wanted to make it clear that they were in charge of everything, including the language.

2. Hence the use of 'Ye' for 'the' in some old documents, which is famously retained by the storefront signs of some traditional pubs (and tony boutiques).

3. In part because it was used inconsistently, in part because it was hard to distinguish from lowercase 'f' in most typefaces, but mostly because it was just too annoying for the typesetters to bother with, being a distinct exception in English - especially at a time when the rules about capitalization were still taking shape - which generally doesn't have positional letter form variations based on the position in the word after the first letter.

4. Alternating left-to-right and right-to-left per line. The term can be translated from Greek roughly as 'in the manner of an ox plow'.

Rev. First Speaker Schol-R-LEA;2 LCF ELF JAM POEE KoR KCO PPWMTF

Ordo OS Project

Lisp programmers tend to seem very odd to outsiders, just like anyone else who has had a religious experience they can't quite explain to others.

Re: What are your least favorite software 'knobs'?

Schol-R-LEA wrote: A number of scripts - most notably Arabic - are stateful, in that different letters have different letter forms depending on their position in the word. I don't offhand know how this is solved in Unicode, so how much of a problem it is isn't clear to me. Perhaps someone else could speak up about it?

It's not only Arabic; the Brahmic scripts (Devanagari etc.) and many others are similarly "morphing".

Mostly a problem for the one implementing the rendering engine of the editor / web browser / application, i.e. outside the scope of Unicode (which encodes characters, not glyphs). The Unicode Database gives some helpful metadata, e.g. whether a cursive-script character is left-binding, right-binding, or both. How to go about actually typesetting the text is not up to Unicode, though they give recommendations and lots of examples for each individual script. Quite an interesting read, actually.
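To make the "characters, not glyphs" point concrete, here is a minimal sketch in Python (picked only because its standard library ships a copy of the Unicode Character Database). It assumes nothing beyond the `unicodedata` module. As far as I know that module does not expose the joining-type property mentioned above, so the sketch looks at the legacy Arabic Presentation Forms instead: the positional glyph variants of the letter beh exist as compatibility code points, and all of them normalize back to the single encoded character.

```python
import unicodedata

BEH = "\u0628"  # ARABIC LETTER BEH: the one character that text should contain

# Legacy compatibility code points (Arabic Presentation Forms-B), one per
# positional glyph shape of the same letter.
PRESENTATION_FORMS = ["\uFE8F", "\uFE90", "\uFE91", "\uFE92"]


def describe(ch):
    """Return (character name, compatibility decomposition) from the UCD."""
    return unicodedata.name(ch), unicodedata.decomposition(ch)


forms = [describe(ch) for ch in PRESENTATION_FORMS]

# NFKC normalization maps every positional form back to the plain letter.
folded = {unicodedata.normalize("NFKC", ch) for ch in PRESENTATION_FORMS}

# Example of other per-character metadata: the bidirectional class.
bidi = unicodedata.bidirectional(BEH)

for name, decomposition in forms:
    print(name, "->", decomposition)
```

Which shape actually gets drawn is then the rendering engine's decision, based on the neighbouring characters; the text itself only ever needs U+0628.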

Schol-R-LEA wrote: Ligations and other kinds of merged digraphs are often a problem, though I gather it is mostly a solved one currently. I would expect Solar to be familiar with this problem (assuming it still is one) regarding the sharp-s character ('ß') in German.

It very much depends on what you consider "a problem". Taking ß as an example, all the plumbing is there to convert it to either "SS" or uppercase "ẞ" (U+1E9E). Of course, the former just cannot be done using the C or C++ API (toupper() maps one character to exactly one character) and the latter only in "wide char" strings. Which one it should be is locale-specific (a so-called "tailoring" of the locale data), because it depends on the context in which you are doing the case conversion. The plumbing is also in place to have comparisons between e.g. "heiß" and "HEISS" result in a match (Unicode Collation Algorithm), but as most people still go through strcmp() / std::string::operator==(), that too is usually not used even where supported by the implementation.
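A small sketch of the two uppercase choices and of a caseless match, in Python rather than C because its `str` methods implement the Unicode full case mappings. Two things here are assumptions of the sketch, not part of any standard API: the helper name `caseless_equal`, and the use of `translate()` to stand in for a locale tailoring. Note also that `str.casefold()` is Unicode case folding, not the Unicode Collation Algorithm; it is swapped in because the standard library has no UCA implementation, and it skips the normalization step a full caseless match would add.

```python
word = "hei\u00DF"  # "heiß"

# Default (untailored) full case mapping: one character becomes two.
default_upper = word.upper()  # "HEISS"

# Stand-in for a tailoring that prefers the capital sharp s (U+1E9E):
# swap the character first, then uppercase the rest.
tailored_upper = word.translate({0x00DF: 0x1E9E}).upper()  # "HEI" + U+1E9E


def caseless_equal(a, b):
    """Compare two strings using Unicode full case folding."""
    return a.casefold() == b.casefold()


print(default_upper, tailored_upper)
print(caseless_equal(word, "HEISS"))
print(caseless_equal(word, tailored_upper))
```

Both spellings fold to "heiss", so either uppercase form matches the lowercase original, whichever tailoring produced it.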

These solutions have existed in Unicode for a long time. I think much of the perception of "brokenness" comes from the fact that the solutions are not simple, because they cannot be simple.

Which is why I am digging into that standard in detail at this point (looking at implementing <locale.h> et al. for PDCLib). It's funny that two very obscure C functions -- strcoll() and strxfrm() -- require major parts of what ICU is doing to be implemented in a basic C library if you want to "do Unicode". This is not a fault of Unicode; it's a strength, because "simpler" solutions will simply not be correct.

What I am trying to say is, we have been lulled into a false feeling of safety by the existence of functions like strcmp(). The number of StackExchange posts that claim that a "case-insensitive string compare" can be done by tolower() followed by a bytewise comparison is disgusting. (Just for the record, there is no tolower() in the standard libraries that will work for UTF-8, and even if there were, that would not be enough, just an 80-20 solution.)
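The two failure modes can be sketched as follows. This is a Python analogue, not the C code in question: `bytes.lower()` only touches ASCII letters, which is roughly what per-byte tolower() does to UTF-8 in the "C" locale, and `str.lower()` plays the part of a hypothetical tolower() that did understand code points. Both function names are made up for the illustration.

```python
def naive_bytes_equal(a, b):
    """Lowercase byte by byte, then compare bytes (ASCII-only lowering)."""
    return a.encode("utf-8").lower() == b.encode("utf-8").lower()


def per_char_equal(a, b):
    """Lowercase code point by code point, then compare."""
    return a.lower() == b.lower()


APFEL_UPPER = "\u00C4PFEL"  # capital A with diaeresis, then "PFEL"
APFEL_LOWER = "\u00E4pfel"  # small a with diaeresis, then "pfel"
HEISS_LOWER = "hei\u00DF"   # "heiß"

# Pure ASCII input: the naive approach looks fine.
print(naive_bytes_equal("Hello", "hELLO"))

# Non-ASCII letter: the byte-wise version misses the match.
print(naive_bytes_equal(APFEL_UPPER, APFEL_LOWER))

# A code-point-aware lowercase fixes that case...
print(per_char_equal(APFEL_UPPER, APFEL_LOWER))

# ...but still misses "HEISS" vs. "heiß", which needs full case folding.
print(per_char_equal("HEISS", HEISS_LOWER))
```

The last line is the "20" in the 80-20: a one-to-one lowercase mapping cannot relate "SS" to "ß", no matter how well it handles the encoding.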

Languages are a very complex matter, and locales are much more than just the ordering of elements in a date. Unicode does a rather comprehensive job at covering all these issues. Sometimes rather elegantly, sometimes not so much. But flaming it for "destroying cultures" because it's as "historically grown" as everything else in our line of work and implementations haven't caught up with the solutions Unicode does provide is really pushing it a bit far, especially as alternative "one size fits all" standards just don't exist (and thank goodness for that).

Last edited by Solar on Mon Aug 13, 2018 6:34 am, edited 2 times in total.

Every good solution is obvious once you've found it.

Re: What are your least favorite software 'knobs'?

FWIW, the whole Han Unification History is actually documented as part of the Unicode Standard itself. As the accounts in the Standard and those expressed in this thread seem to differ a bit, I thought providing this link couldn't hurt.
